Here is an overview and some notes from the Drupal Scalability and Performance Workshop I attended before the start of DrupalCon, which Ian and I are attending in San Francisco. As the title suggests, the workshop focused on making Drupal (and web applications in general) run fast. Really fast. Over the coming weeks and months I hope to apply the techniques from this workshop to make our sites fast enough to handle any traffic load thrown at them, even if some event were to send a surge of public traffic our way.

Read on if you are interested in the performance and scalability of Drupal, MySQL databases, and web applications in general.

After a two-hour overview of the application, server, database, and proxy configuration areas that can affect performance, we spent much of the rest of the day deploying a basic Drupal installation on virtual machines and working through each of the optimizations discussed, running benchmarks at each stage.

Benchmarking

While tools such as Apache JMeter can build complex test plans that more accurately simulate production loads, Apache Bench (ab) is a very simple way to benchmark how a change affects the performance of a single page. This is critical for determining whether a change speeds up or slows down the serving of pages. Apache Bench is run from the command line with arguments for the total number of requests (-n), the number of requests to run concurrently (-c), and the URL of the page to test:

ab -n 1000 -c 50 http://host.example.edu/drupal/

A session cookie copied from an authenticated browser session can also be passed with -C to measure the responsiveness of pages that require authentication:

ab -n 1000 -c 50 -C 'SESSd1e57edd95f34cfedb49c219e55ddf26=f35afe5e50caf15cba72c448afe72f81' http://host.example.edu/drupal/some/page/

Code Profiling

Once slow pages are identified, the location of performance issues can be determined by code profiling.

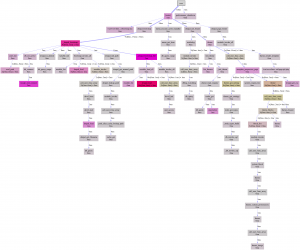

The Four Kitchens wiki has a good page on how to profile Drupal code using XDebugToolkit and generate traces like the one above, which make it easy for developers to see where in the PHP code most of the processing time is spent.
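Xdebug's profiler writes its output in the cachegrind format, which tools such as KCachegrind can visualize. As a rough sketch of what such tools compute, the Python below sums each function's self cost (time spent in the function itself, excluding its callees) and ranks functions by it. It assumes the simple line-oriented layout Xdebug uses (`fn=`, `cfn=`, `calls=`, then `line cost` pairs, with optionally compressed names like `fn=(3) name`); it is not a complete cachegrind parser, and the function names are hypothetical.

```python
import re
from collections import defaultdict

# Names may be written in full ("fn=foo") or compressed: "fn=(12) foo" the
# first time a name appears, then just "fn=(12)" afterwards.
_COMPRESSED = re.compile(r"^\((\d+)\)(?:\s+(.*))?$")


def _resolve(spec, names):
    """Map a possibly compressed function spec to its full name."""
    match = _COMPRESSED.match(spec)
    if match is None:
        return spec
    ident, name = match.groups()
    if name:
        names[ident] = name  # first occurrence defines the id
    return names.get(ident, spec)


def self_time_by_function(lines):
    """Return [(function, self_cost), ...] sorted from most to least expensive."""
    names = {}
    totals = defaultdict(int)
    current = None
    next_cost_is_call = False
    for raw in lines:
        line = raw.strip()
        if line.startswith("fn="):
            current = _resolve(line[3:], names)
            next_cost_is_call = False
        elif line.startswith("cfn="):
            _resolve(line[4:], names)  # only registers compressed names
        elif line.startswith("calls="):
            # The next cost line is the callee's inclusive cost, not self time.
            next_cost_is_call = True
        elif line[:1].isdigit() and current is not None:
            fields = line.split()
            if next_cost_is_call:
                next_cost_is_call = False
            elif len(fields) > 1:
                totals[current] += int(fields[1])  # first event column (e.g. Time)
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)
```

A call such as `with open(path) as f: print(self_time_by_function(f)[:10])` would list the ten functions with the highest self cost, where `path` points at an Xdebug profiler output file.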

MySQL Database Tuning

The first step in setting up a high-performing MySQL database server is to swap out the built-in InnoDB engine for the InnoDB Plugin from Innobase (a subsidiary of Oracle). The InnoDB Plugin performs two or more times faster than the built-in engine and is a fully compatible drop-in replacement. (Packages of the InnoDB Plugin for RHEL are available in the Remi YUM Repository.) Other InnoDB implementations, such as Percona's XtraDB, offer further performance improvements and configuration options, but the Innobase InnoDB Plugin is itself a big win.

With the InnoDB Plugin installed, the next step is tuning the database's configuration parameters. In general, all of this tuning aims to keep the appropriate caches, data, indexes, and table metadata resident in system RAM so that the database server does not have to load them from disk. For details, see my example my.cnf configuration file with notes, as well as these step-by-step tuning instructions from Four Kitchens.
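A quick way to check whether that goal is realistic is to compare the total size of your InnoDB data and indexes with `innodb_buffer_pool_size`. This Python sketch takes rows of (ENGINE, DATA_LENGTH, INDEX_LENGTH) as returned by MySQL's `information_schema.TABLES`. The function names, the `drupal` schema name in the comment, and the 25% growth margin are illustrative assumptions, not official tuning rules.

```python
def innodb_working_set(tables):
    """Total bytes of InnoDB data plus indexes.

    `tables` is an iterable of (engine, data_length, index_length) rows, e.g. from:
      SELECT ENGINE, DATA_LENGTH, INDEX_LENGTH
      FROM information_schema.TABLES WHERE TABLE_SCHEMA = 'drupal';
    ('drupal' is a placeholder schema name.) Views report ENGINE as NULL (None)
    and are skipped.
    """
    return sum(
        (data or 0) + (index or 0)
        for engine, data, index in tables
        if engine == "InnoDB"
    )


def fits_in_buffer_pool(tables, buffer_pool_bytes, headroom=1.25):
    """True if the InnoDB working set, plus a growth margin, fits in the buffer pool.

    The default 25% headroom is an arbitrary illustrative margin.
    """
    return innodb_working_set(tables) * headroom <= buffer_pool_bytes
```

MyISAM tables are deliberately left out here: their indexes are cached in the separate key buffer (`key_buffer_size`) rather than the InnoDB buffer pool.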

One side note that came up in the workshop was the performance difference between the MyISAM and InnoDB engines. The ‘conventional wisdom’ is that the older, simpler MyISAM engine performs better than InnoDB for read queries because it spends less overhead on ensuring data integrity. According to the workshop, this supposed edge only applies in a tiny subset of cases: where the server is severely memory-constrained (such as on a small slice of a shared host) or where the data set is massive and cannot reasonably be held in RAM (such as some multi-terabyte reporting databases). Because MyISAM is designed as a file-based engine while InnoDB is designed to hold data in memory, the claim was that whenever much or all of the data and indexes fit in memory, InnoDB will always outperform MyISAM, even on simple SELECT statements.

Opcode Caching

One performance improvement we already have in place for most of our web applications is caching compiled PHP ‘opcodes’ in RAM with the PHP APC extension, so that PHP scripts don’t need to be read off the hard disk and compiled on every page load.

Having APC enabled also allows very lightweight monitoring of page-load statistics with the Drupal Devel-Performance module, giving us summary statistics of the actual page-load times users are getting from the production webservers.
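Page-load times are more informative summarized as a median and a high percentile than as an average alone, because a few very slow pages can hide behind a healthy mean. This sketch assumes the timings (in milliseconds) have already been exported from the module's log into a Python list; how to export them is not covered here, and the function name is hypothetical.

```python
import statistics


def summarize_page_times(times_ms):
    """Summary statistics for a list of page-generation times in milliseconds."""
    if len(times_ms) < 2:
        raise ValueError("need at least two samples")
    return {
        "count": len(times_ms),
        "mean_ms": statistics.fmean(times_ms),
        "median_ms": statistics.median(times_ms),
        # quantiles(n=20) returns the 5th, 10th, ..., 95th percentile cut points;
        # the last one is the 95th percentile.
        "p95_ms": statistics.quantiles(times_ms, n=20)[-1],
    }
```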

Note: APC <= 3.0 has an issue where the entire cache is cleared if it becomes full.
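To make that note concrete, here is a toy Python model of an opcode cache (not APC's actual code). Compiled output is keyed on the file path and modification time, so an edited file gets recompiled, roughly as APC does when `apc.stat` is enabled. The `clear_all_when_full` flag mimics the APC <= 3.0 behavior of dropping the whole cache once it fills; setting it to False evicts only the least recently used entry instead. Class and parameter names are hypothetical.

```python
from collections import OrderedDict


class ToyOpcodeCache:
    """Toy model of an opcode cache keyed on (path, mtime)."""

    def __init__(self, compile_fn, capacity, clear_all_when_full=True):
        self.compile_fn = compile_fn  # stands in for the PHP compiler
        self.capacity = capacity
        self.clear_all_when_full = clear_all_when_full
        self.entries = OrderedDict()  # ordered from least to most recently used
        self.compiles = 0

    def load(self, path, mtime):
        key = (path, mtime)
        if key in self.entries:
            self.entries.move_to_end(key)  # mark as recently used
            return self.entries[key]
        if len(self.entries) >= self.capacity:
            if self.clear_all_when_full:
                self.entries.clear()  # the APC <= 3.0 behavior from the note
            else:
                self.entries.popitem(last=False)  # evict least recently used only
        self.compiles += 1
        opcodes = self.compile_fn(path)
        self.entries[key] = opcodes
        return opcodes
```

With a small capacity and a request pattern where one hot file (say index.php) is interleaved with other files, the clear-all policy can throw the hot file away and force it to be recompiled, which is the kind of thrashing the note warns about.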

Memcache

The second performance improvement we are already using is Memcache with the Drupal Memcache Module, which lets cached data live in memory on the webservers instead of requiring queries to the database server. Since the MySQL database server is generally the performance bottleneck and is hard to scale horizontally, even moving simple cache lookups off of it can be a big performance improvement.

Note: Use the dev version of the Drupal Memcache Module so that one large memory bin (possibly spanning multiple machines) can be used, rather than having to separate the cache ‘tables’ into multiple bins. The ‘stable’ version of the module didn’t prefix keys with their cache ‘table’, so clearing one table also cleared unrelated cached data.
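memcached cannot delete keys by prefix, so one standard technique for clearing a single cache ‘table’ inside one shared bin is to bake the table name plus a per-table generation number into every key; clearing a table then just bumps its generation. The Python sketch below uses a dict as a stand-in for memcached. It illustrates that general technique and is not necessarily how the Drupal Memcache Module implements its prefixing; the class and method names are hypothetical.

```python
class SharedCacheBin:
    """One key/value store shared by every Drupal cache 'table'.

    A plain dict stands in for memcached. Each table's generation number is kept
    in the same store (as it would be in memcached, so all webservers see it)
    and is embedded in that table's keys.
    """

    def __init__(self):
        self.store = {}

    def _generation(self, table):
        return self.store.get(f"__generation__:{table}", 0)

    def _key(self, table, cid):
        return f"{table}:{self._generation(table)}:{cid}"

    def set(self, table, cid, value):
        self.store[self._key(table, cid)] = value

    def get(self, table, cid):
        return self.store.get(self._key(table, cid))

    def clear_table(self, table):
        # Old entries are orphaned rather than deleted; memcached's LRU
        # eviction would eventually reclaim their memory.
        self.store[f"__generation__:{table}"] = self._generation(table) + 1
```

Clearing `cache_page` this way leaves `cache_menu` entries untouched, whereas without per-table keys the only safe option is flushing the entire bin.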

PressFlow

PressFlow is a Drupal distribution designed for high performance that has several differences from the main release of Drupal:

- Legacy support for PHP4 is dropped, allowing better performance in PHP5

- Support for databases other than MySQL is dropped, allowing PressFlow to use higher-performance features that a lowest-common-denominator approach would not support

- Rather than being created on the first web request, sessions are only created when data is stored in them. This “Lazy Session Creation” allows proxies like Squid and Varnish to differentiate between anonymous and authenticated traffic and only cache content for anonymous users

- Read queries can be split off to read-only slave databases, taking load off the read-write master database
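Lazy session creation can be sketched as a session object that only allocates an ID, and only sends a Set-Cookie header, once something is actually stored in it (a login, for example). The Python below illustrates the idea and is not PressFlow's PHP code; the class and the `SESSexample` cookie name are hypothetical.

```python
import secrets


class LazySession:
    """Session that is only created (and a cookie sent) once data is stored in it."""

    def __init__(self, session_id=None):
        self.session_id = session_id  # None: the browser sent no session cookie
        self.data = {}
        self._needs_cookie = False

    def __setitem__(self, key, value):
        self.data[key] = value
        if self.session_id is None:
            # First write: this is the moment the session actually starts.
            self.session_id = secrets.token_hex(16)
            self._needs_cookie = True

    def response_headers(self, cookie_name="SESSexample"):
        # A visitor who never stored anything gets no Set-Cookie header, so the
        # response stays cacheable by a proxy such as Squid or Varnish.
        if self._needs_cookie:
            return {"Set-Cookie": f"{cookie_name}={self.session_id}; Path=/; HttpOnly"}
        return {}
```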

Apart from these changes, PressFlow is a drop-in replacement for Drupal core.

See this page for more information on PressFlow.
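Splitting reads from writes can be sketched as a router that sends plain SELECT statements to slaves in round-robin order and everything else to the master. This is an illustrative assumption about how such routing might work, not PressFlow's own mechanism. The statement-type check is deliberately naive, and in practice reads that must see a write made moments earlier should also go to the master because of replication lag.

```python
import itertools


class QueryRouter:
    """Send plain SELECTs to read-only slaves (round-robin), everything else to the master."""

    def __init__(self, master, slaves):
        self.master = master
        self._slaves = itertools.cycle(slaves) if slaves else None

    def route(self, sql):
        statement = sql.lstrip().upper()
        # SELECT ... FOR UPDATE takes locks, so it must run on the master.
        is_plain_read = statement.startswith("SELECT") and "FOR UPDATE" not in statement
        if is_plain_read and self._slaves is not None:
            return next(self._slaves)
        return self.master
```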

Varnish

Varnish is a reverse proxy* that can run on the web host in front of the Apache webserver. It caches content destined for anonymous users and serves that cached content to other anonymous users straight from memory, so cached requests never have to be processed by Drupal code.

* A “Forward Proxy” is a proxy server that usually sits close to the clients (often at an ISP) and caches the responses to requests those clients make to the broader internet. Varnish, as a “Reverse Proxy,” sits close to the webserver (even on the same machine) and caches the responses to requests coming into it from the broader internet.

Since anonymous traffic is often orders of magnitude greater than authenticated traffic, serving pages for anonymous users from a cache before PHP code is even touched can result in huge speed improvements for anonymous users. The cache performance can be as high as ~5,000-10,000 pages per second compared to a max of about 100-300 pages per second for executed Drupal code, even with its internal page caching turned on. Authenticated users get a speed boost as well since the total traffic being processed through the Drupal PHP code drops.

This wiki page has a configuration file for running Varnish with Drupal. The basic idea is that Google Analytics cookies are ignored when determining whether traffic is authenticated or anonymous. If any other cookies are set, Varnish assumes the request comes from an authenticated user, passes it through to Apache/Drupal, and does not cache the result when sending it back to the client. If no other cookies are present, Varnish returns the result from its cache if one is found.
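That cookie logic can be modeled in a few lines of Python (the real configuration is written in VCL). Google Analytics cookies, whose names start with `__utm`, are stripped first; if anything is left, the request is passed through uncached, otherwise it is looked up in the cache. The action names mirror the `lookup` and `pass` actions of older (2.x/3.x) VCL; the function itself is hypothetical.

```python
def varnish_action(cookie_header):
    """Model of the VCL cookie check: returns ("lookup" or "pass", remaining cookies)."""
    remaining = []
    for part in (cookie_header or "").split(";"):
        pair = part.strip()
        name = pair.split("=", 1)[0]
        # Drop empty fragments and Google Analytics cookies (__utma, __utmz, ...).
        if name and not name.startswith("__utm"):
            remaining.append(pair)
    # Any cookie left over is treated as a sign of an authenticated user.
    action = "pass" if remaining else "lookup"
    return action, "; ".join(remaining)
```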

One downside of Varnish is that caching is based on Expires headers, so anonymous users may see slightly stale content (pages are often set to refresh every 5 minutes or so). The Drupal Varnish module includes options that allow much longer expiry times and actively clear pages from the cache when they change, but at the cost of additional complexity. If we wanted active cache clearing, it would likely take a lot of testing to ensure that all caches are appropriately cleared for every kind of content change.
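The trade-off between expiry-based caching and active clearing shows up in a minimal model: a page stored with a 300-second lifetime may be served stale for up to five minutes after it is edited, while an explicit purge removes it at once but must be issued for every URL the change affects (front page, listings, feeds). The sketch below is a Python illustration with a caller-supplied clock, not Varnish or the Drupal Varnish module; its names are hypothetical.

```python
class ExpiringPageCache:
    """Anonymous-page cache with a fixed lifetime plus optional explicit purges."""

    def __init__(self, ttl_seconds=300):
        self.ttl_seconds = ttl_seconds
        self._pages = {}  # url -> (body, expires_at)

    def store(self, url, body, now):
        self._pages[url] = (body, now + self.ttl_seconds)

    def fetch(self, url, now):
        entry = self._pages.get(url)
        if entry is None or now >= entry[1]:
            self._pages.pop(url, None)
            return None  # miss: the request would go through to Apache/Drupal
        return entry[0]  # hit: possibly stale if the content changed meanwhile

    def purge(self, url):
        # Active clearing: drop the page as soon as its content changes.
        self._pages.pop(url, None)
```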

Hudson

Hudson is a continuous integration tool that can be used as a kind of cron-on-steroids, with nice features such as statistics on cron-run execution times and email notifications to admins when cron runs fail. It also prevents cron runs from stepping on each other if a previous run hasn’t completed yet.
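For comparison, this is roughly what one would hand-roll to get the same overlap protection and timing from plain cron. The Python sketch takes a non-blocking `flock` on a lock file, skips the run if a previous one still holds the lock, and reports the exit code and duration; a non-zero exit code is what Hudson would turn into a failure email. It is Unix-only, and the function name and lock path are hypothetical.

```python
import fcntl
import subprocess
import time


def run_exclusive(cmd, lock_path):
    """Run `cmd` unless a previous run still holds the lock.

    Returns None if the run was skipped, else (exit_code, seconds_taken).
    The lock is released automatically when the lock file is closed.
    """
    with open(lock_path, "a") as lock_file:
        try:
            # Exclusive, non-blocking: fails immediately if another run holds it.
            fcntl.flock(lock_file, fcntl.LOCK_EX | fcntl.LOCK_NB)
        except BlockingIOError:
            return None
        started = time.monotonic()
        exit_code = subprocess.run(cmd).returncode
        return exit_code, time.monotonic() - started
```

A crontab entry could then call something like `run_exclusive(["drush", "cron"], "/tmp/drupal-cron.lock")`, logging the duration and alerting on a non-zero exit code.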

Other modules and notes related to performance

- Materialized Views

- Boost – Writes out Drupal pages as static HTML. Varnish is a vastly better solution, but not possible on some web hosting providers (not an issue for Middlebury).

- Panels – Can allow caching of blocks and other content pieces even for authenticated users

- Views – Can cache results; just be careful with views that are subject to node-access restrictions
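When cached results come from a view subject to node-access restrictions, the cache key has to vary with what the current user is allowed to see; otherwise one user's cached results could leak to another. A minimal sketch, assuming the user's grants are available as (realm, gid) pairs like those in Drupal's node access grants. The function name and key layout are hypothetical and do not reflect Views' internal cache keys.

```python
import hashlib


def views_cache_key(view_name, display_id, args, node_access_grants):
    """Build a cache key that varies with the user's node-access grants.

    `node_access_grants` is an iterable of (realm, gid) pairs. Users with the
    same set of grants share cache entries; users with different grants do not.
    """
    # Sort so the same set of grants always yields the same key.
    grants = ",".join(sorted(f"{realm}:{gid}" for realm, gid in node_access_grants))
    digest = hashlib.sha1(grants.encode("utf-8")).hexdigest()[:12]
    return ":".join(["views", view_name, display_id, "/".join(args), digest])
```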