Executive summary:

We’ve migrated from core Drupal-6 to Pressflow, a back-port of Drupal-7 performance features. Using Pressflow allows us to cache anonymous web-requests (about 77% of our traffic) for 5 minutes and return them right from memory. While this vastly improves the amount of traffic we can handle as well as the speed of anonymous page-loads, it does mean that anonymous users may not see new versions of content for up to 5 minutes. Traffic for logged-in users will continue to flow directly through to Drupal/Pressflow and will always be up-to-the-instant fresh.

Read on for more details about what has changed and where we stand with regard to website performance.

Background

When we first launched the new Drupal website back in February we went through some growing pains that necessitated code fixes (Round 1 and Round 2) as well as the addition of an extra web-server host and database changes (Round 2).

These improvements brought our site up to acceptable performance levels, but I was concerned that we might run into performance problems if the college ended up in the news and thousands of people suddenly went to view our site.

At DrupalCon a few weeks ago I attended a Drupal Performance Workshop where I learned a number of techniques that can be used to scale Drupal sites to be able to handle internet-scale traffic — not Facebook or Google-level traffic, but that of The Grammys, Economist, or World Bank.

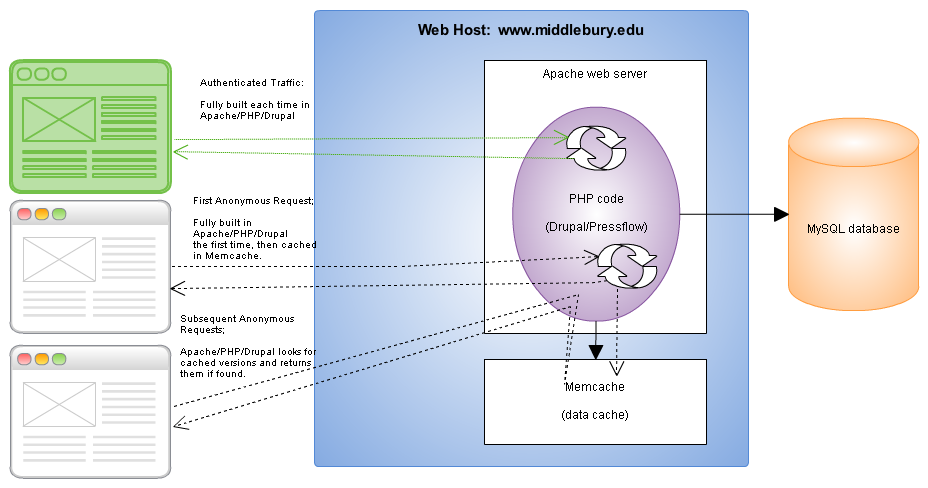

Since before the launch of the new site we have been using opcode caching via APC to speed code execution and data caching with Memcache to reduce the load on the database. This system architecture is far more performant than a baseline setup, but we could still only handle a sustained average of 20 requests each second before the web-host became fully loaded. While this is double our normal average of 10 requests per second, it is not nearly enough headroom to feel safe from traffic spikes.

Request flow through our Drupal web-host prior to May 13th, using normal Drupal page-caching stored in Memcache.

Switching to Pressflow

Last week we switched from the standard Drupal-6.16 to Pressflow-6.16.77, a version of Drupal 6 that has had a number of the performance-related improvements from Drupal-7 back-ported to it. Code changes in Pressflow, such as dropping legacy PHP4 support and supporting only MySQL, enable Pressflow to execute about 27% faster than Drupal. This is a useful improvement, but not enough to make a huge difference were we to get double or triple our normal traffic.

For us, the most important difference between Pressflow and Drupal-6 is that sessions are ‘lazily’ created. This means that rather than creating a new ‘session’ on the server to hold user-specific information on the first page each user sees on the website, Pressflow instead only creates the session when the user hits a page (such as the login page) that actually has user-specific data to store. This change makes it very easy to differentiate between anonymous requests (no session cookies) and authenticated requests (that have session cookies) and enables the next change, Varnish page caching.

Varnish Page Caching

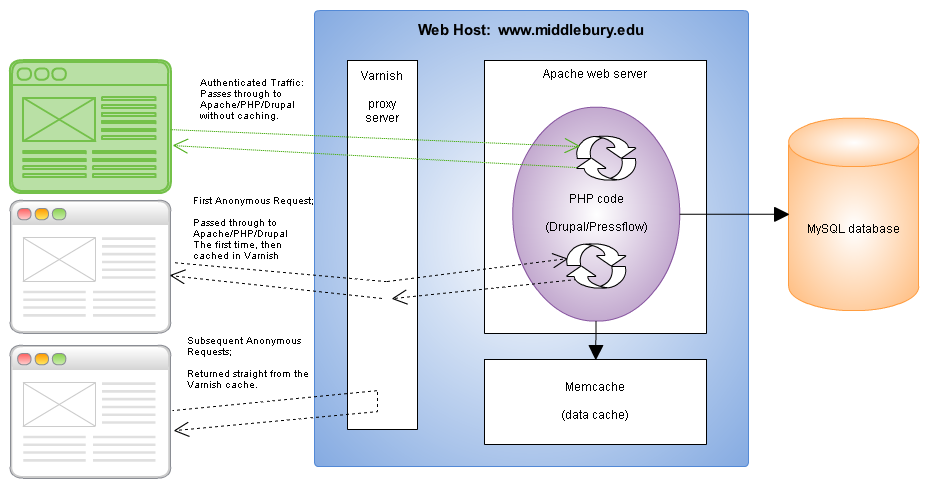

Varnish is a reverse-proxy server that runs on our web hosts and can return pages and images from its own in-memory cache so that they don’t have to execute in Drupal/Pressflow every single time. The default rule in Varnish is that if there are any cookies in the request, then the request is for a particular user and should be transparently passed through to the back-end (Drupal/Pressflow). If there are no cookies in the request, then Varnish assumes correctly that it is an anonymous request and tries to respond from its cache without bothering the back-end.
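The rule described above can be sketched in a few lines of Python. This is only a toy model to illustrate the decision logic: the real Varnish is configured in its own configuration language rather than Python, and the names used here (`PageCache`, `handle`, `backend`, `clock`) are hypothetical. The 5-minute lifetime is the one figure taken from this post.

```python
import time

CACHE_TTL_SECONDS = 5 * 60  # the 5-minute cache lifetime described in this post


class PageCache:
    """Toy in-memory page cache mimicking the cookie rule (not real Varnish)."""

    def __init__(self, backend, ttl=CACHE_TTL_SECONDS, clock=time.monotonic):
        self.backend = backend  # hypothetical callable: (url, cookies) -> body
        self.ttl = ttl
        self.clock = clock      # injectable clock so the sketch is testable
        self.store = {}         # url -> (expires_at, body)

    def handle(self, url, cookies):
        # Any cookie means the request belongs to a particular user:
        # pass it straight through and never cache the response.
        if cookies:
            return "pass", self.backend(url, cookies)

        now = self.clock()
        entry = self.store.get(url)
        if entry is not None and entry[0] > now:
            # Fresh cached copy: answer without bothering the back-end.
            return "hit", entry[1]

        # Anonymous request with no fresh copy: fetch once, then cache it.
        body = self.backend(url, cookies)
        self.store[url] = (now + self.ttl, body)
        return "miss", body
```

In this model the first anonymous request for a URL is a "miss" that reaches the back-end, later anonymous requests within 5 minutes are "hit"s served from memory, and any request carrying a cookie is a "pass" regardless of what is cached.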

Request flow through our Drupal/Pressflow web-host after May 13th, using the Varnish proxy-server for caching.

Since about 77% of our traffic is non-authenticated, Varnish only sends about 30% of the total requests through to Apache/PHP/Drupal: all authenticated requests, plus anonymous requests for pages whose cached copy is more than 5 minutes old. Were we to have a large spike in anonymous traffic, virtually all of this increase would be served directly from Varnish’s cache, preventing any load increase on Apache/PHP/Drupal or the back-end MySQL database. In my tests against our home page, Varnish was able to easily handle more than 10,000 requests each second, with the limiting factor being network speed rather than Varnish.
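As a rough cross-check of those two percentages, the arithmetic can be written out as below. Both inputs are the approximate figures quoted above ("about 77%" and "about 30%"), so the derived values are ballpark numbers only; the function and key names are made up for this illustration.

```python
def traffic_split(anonymous_share, backend_share):
    """Derive rough traffic fractions from the two figures quoted in the post."""
    authenticated_share = 1.0 - anonymous_share
    # Back-end traffic = all authenticated requests + anonymous cache misses.
    anonymous_miss_share = backend_share - authenticated_share
    cache_served_share = 1.0 - backend_share
    # Fraction of anonymous requests answered from the cache.
    anonymous_hit_rate = cache_served_share / anonymous_share
    return {
        "authenticated": authenticated_share,
        "anonymous_misses": anonymous_miss_share,
        "served_from_cache": cache_served_share,
        "anonymous_hit_rate": anonymous_hit_rate,
    }


if __name__ == "__main__":
    for name, value in traffic_split(0.77, 0.30).items():
        print(f"{name}: {value:.0%}")
```

With these inputs, roughly 23% of all requests are authenticated, about 7% are anonymous cache misses, and around 91% of anonymous requests are answered from the cache.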

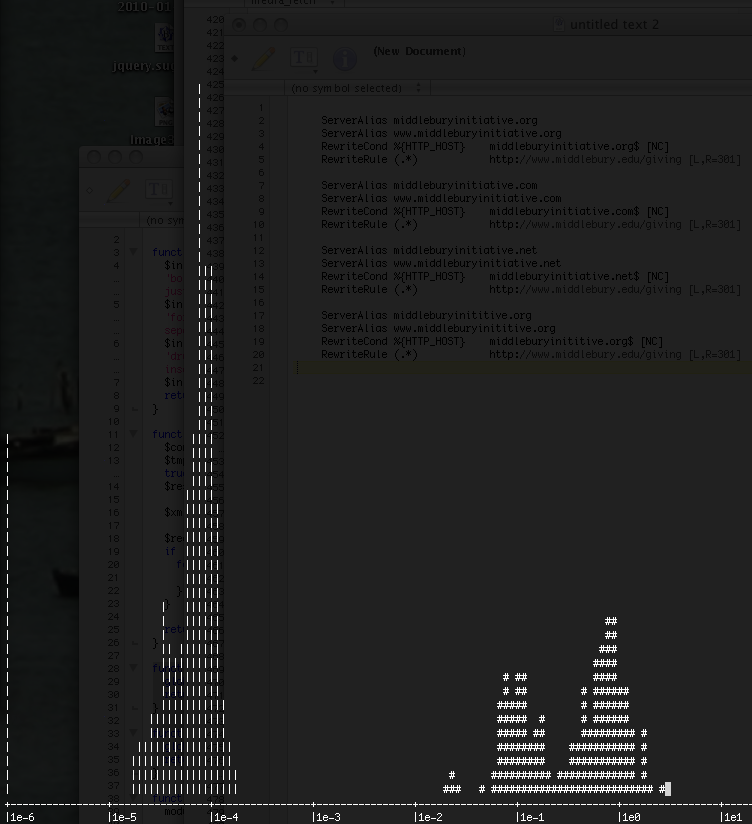

A histogram of requests to the website. The Y-axis is the number of requests and the X-axis is the time taken to return them; '|' requests were handled by Varnish's cache and '#' requests were passed through to Drupal. The majority of our requests are being handled quickly by Varnish, while a smaller portion are being passed through to Drupal.

MySQL Improvements

During the scheduled downtime this past Sunday, Mark updated our MySQL server and installed the InnoBase InnoDB Plugin, a high-performance storage engine for MySQL that can provide twice the performance of the built-in InnoDB engine in MySQL for the types of queries done by Drupal.

Last week Mark and I also went through our database configuration and verified that the important parameters were tuned correctly.

As the MySQL database is not currently the bottleneck that limits our site performance, these improvements will likely have a minor (though widespread) effect. Were our authenticated traffic to increase further (due to more people editing, for instance), these improvements would become more important.

Where We Are Now

At this point the website should be able to handle at least 20,000 requests/second from anonymous users (10,000 on each of two web-hosts) at the same time that it is handling up to 40 requests/second from authenticated users (20 on each of two web-hosts).

While it is impossible to accurately translate these request rates into the number of users we can support visiting the site, a very rough estimation would be to divide the number of requests/second by 10 (a guess at the average number of requests needed for each page view) to get a number of page-views that can be handled each second. (1)
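That rule of thumb is simple enough to write down directly. The sketch below uses only the numbers from this section (two web-hosts, 10,000 anonymous and 20 authenticated requests/second per host, and the guessed 10 requests per page view); the function name and parameters are hypothetical.

```python
REQUESTS_PER_PAGE_VIEW = 10  # the post's own guess, not a measured value


def page_views_per_second(requests_per_second_per_host, hosts=2,
                          requests_per_page_view=REQUESTS_PER_PAGE_VIEW):
    """Very rough page-view capacity from a per-host request rate."""
    total_requests_per_second = requests_per_second_per_host * hosts
    return total_requests_per_second / requests_per_page_view


if __name__ == "__main__":
    print("anonymous page views/second:", page_views_per_second(10_000))
    print("authenticated page views/second:", page_views_per_second(20))
```

Under these assumptions the estimate works out to about 2,000 anonymous page views per second and about 4 authenticated page views per second. The result is only as good as the guess of 10 requests per page view.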

In addition to how many requests can be handled, how fast the requests are returned is also important. Our current response times for un-cached pages usually fall between 0.5 seconds and 2 seconds. If pages take much longer than 2 seconds, the site can “feel slow”. For anonymous pages cached in Varnish, response times range from 0.001 seconds to 0.07 seconds, much faster than Apache/Drupal can manage and more than fast enough for anything we need.

The last performance metric that we are concerned with is the time it takes for the page to be usable by the viewer. Even if they receive all of the files for a page in only 0.02 seconds, it may still take their browser several seconds to parse these files, execute JavaScript code, and render the page. Due to these factors, my testing has shown that most pages on our site take between 1 and 3 seconds before they feel loaded to anonymous users. For authenticated users, this stretches to 2-4 seconds.

Finally, please be aware that anonymous users see pages that may be cached for up to 5 minutes. While this is fine for the vast majority of our content, there are a few cases where we may need the content shown to be up-to-the-second fresh. We will address these few special cases over the coming months.

Future Performance Directions

Now that we have our caching system in place, our system architecture is relatively complete for our current performance needs. While we may do a bit of tuning on various server parameters, our focus now shifts to PHP and JavaScript code optimization to further improve server-side and client-side performance respectively.

One big impact on JavaScript performance (and hence perceived load-time) is that we currently have to include two separate versions of the jQuery JavaScript library, because different parts of the site rely on different versions. Phasing out the older version will cut the amount of code that the browser has to parse almost in half.

Additional Notes

(1) As people browse the site, their browser needs to load the main HTML page as well as make separate requests for JavaScript files, style-sheet (CSS) files, and every image. After these have been loaded the first time, most browsers will cache these files locally and only request them again after 5 minutes or if the user clears their browser cache. CSS files and images that haven’t been seen before will need to be loaded as new pages are browsed to. For example, the first time someone loads the Athletics page, it requires about 40 requests to the server for a variety of files. A subsequent click on the Arts page would require an additional 13 requests, while a click back to the Athletics page would require only 1 additional request, as the images would still be cached in the browser.